What Is RAG in AI: A Simple Guide to Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) in AI pulls in external data to improve the accuracy of model responses. This technique helps keep outputs current and contextually relevant. In this article, we’ll cover what RAG in AI is, how it works, and its advantages.

Understanding Retrieval-Augmented Generation (RAG)

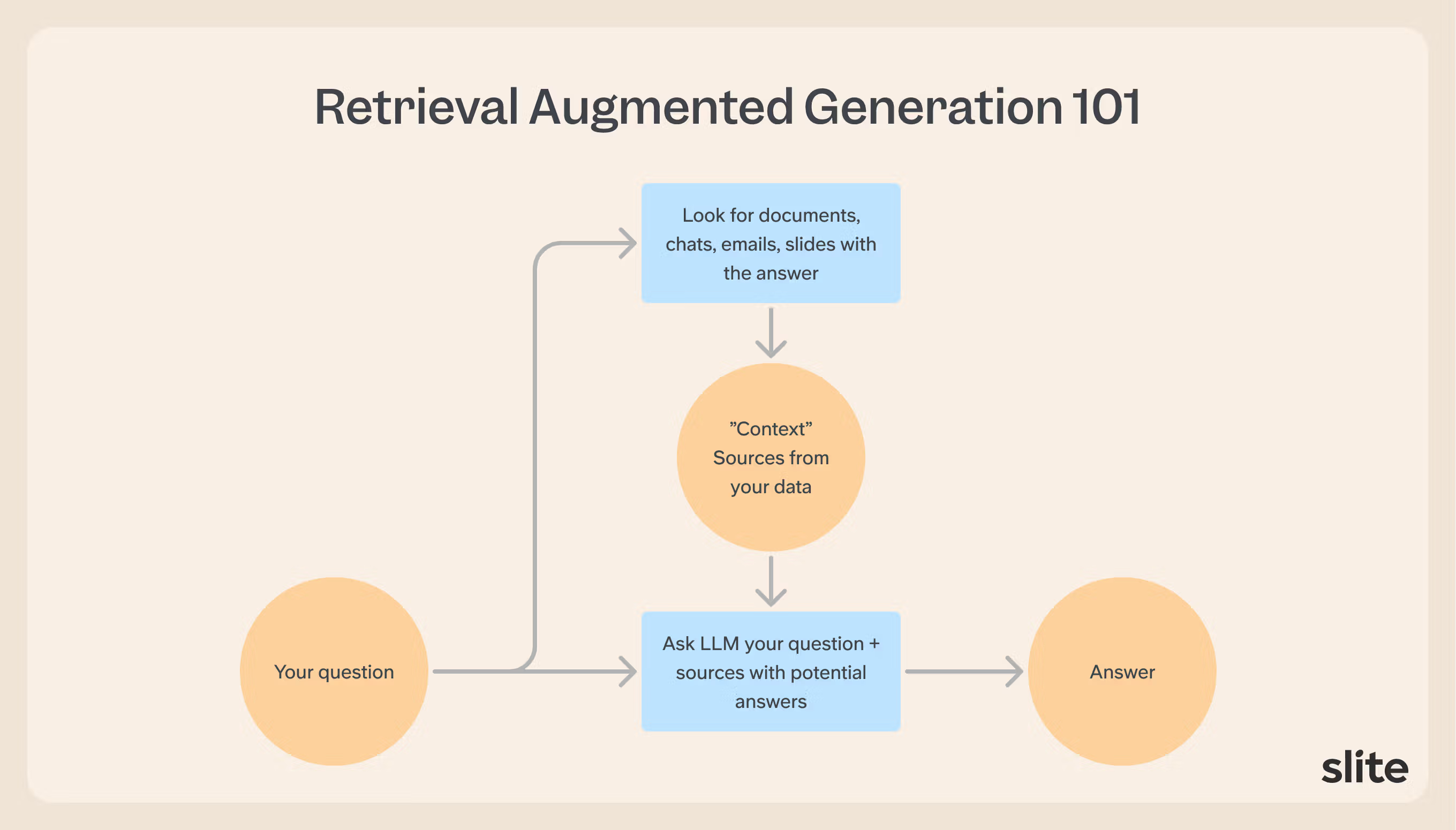

RAG (Retrieval-Augmented Generation) is a technique that lets an AI model access specific information beyond its training data. It combines information retrieval and language generation to enhance responses.

The system works through several key steps:

- It indexes all available documents, maintains location references, and provides relevant context to the AI

- Documents are converted into vector embeddings, creating topical clusters and relevance markers

- When receiving a query, it processes the question, searches the vector database, and identifies relevant context

- The system extracts relevant information and provides it to the base model for response generation
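The steps above can be sketched end to end in a few lines of Python. This is a minimal illustration, not a production design: `embed` is a toy bag-of-words counter standing in for a real embedding model, the document IDs and texts are made up, and the call to the language model itself is left out, so the sketch stops at the augmented prompt that would be sent to it.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector standing in for a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Steps 1-2: index the documents as vectors, keeping a reference to where each one lives.
documents = {  # hypothetical example corpus
    "handbook.md#leave": "Employees get 25 days of paid leave per year.",
    "handbook.md#remote": "Remote work is allowed up to three days per week.",
    "faq.md#vpn": "Connect to the VPN before opening internal dashboards.",
}
index = [(source, text, embed(text)) for source, text in documents.items()]


# Step 3: embed the query and rank indexed documents by similarity.
def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[2]), reverse=True)
    return [(source, text) for source, text, _ in ranked[:k]]


# Step 4: pass the retrieved context, with its sources, to the base model via the prompt.
def build_prompt(query: str) -> str:
    context = "\n".join(f"[{source}] {text}" for source, text in retrieve(query))
    return (
        "Answer using only the context below and cite the sources.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


print(build_prompt("How many days of paid leave do employees get?"))
```

Keeping the source ID next to each text is what later makes source attribution possible: the model can only cite what the prompt labels.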

Key advantages of RAG include:

- Higher accuracy through source documents

- Current information access

- Source attribution

- More reliable and verifiable responses

This approach addresses a fundamental problem with standard AI models: their general knowledge comes from training data that is typically months or years out of date. RAG bridges this gap by giving the model access to current documentation, recent events, and private databases.

In practical implementations, RAG has shown significant results, with a reported 63% improvement in response accuracy from integrating external knowledge retrieval with LLM generation.

The Evolution of RAG

The roots of Retrieval-Augmented Generation (RAG) can be traced back to the 1970s, when researchers began building the first question-answering systems. These early efforts laid a solid foundation for later advances in natural language processing.

Such technology did not capture widespread attention until Ask Jeeves emerged in the mid-1990s and let people search using natural-language questions.

In a significant leap forward, researchers at Facebook AI Research (now Meta), led by Patrick Lewis, published an influential 2020 paper that coined the term ‘RAG’ and introduced an architecture for applying the concept to modern language models.

Since then, RAG has been central to state-of-the-art AI research, inspiring numerous scholarly articles and practical implementations in natural language processing and question-answering systems.

How RAG Enhances Large Language Models

RAG enhances large language models in several key ways:

- It bridges the gap between general knowledge and current information by connecting AI to specific data sources

- It enables higher accuracy through source documents, current information access, and source attribution, making responses more reliable and verifiable

- It separates the LLM's ability to generate responses from its ability to retrieve factual knowledge

- It has delivered a reported 63% improvement in response accuracy by integrating external knowledge retrieval with LLM generation

- It allows AI to look up facts instead of guessing or making things up

- It enables knowledge updates without requiring model retraining

The system works by:

- Converting documents into vector embeddings

- Creating topical clusters and relevance markers

- Processing queries to identify relevant context

- Extracting information and providing it to the base model for response generation

RAG’s architecture consists of distinct components for processing queries, fetching relevant information from external databases, and generating coherent responses. This structured approach mitigates pitfalls common in standard AI models, such as inaccuracies and fabricated statements. As a result, RAG is increasingly deployed across industries to improve decision-making and customer engagement.

The Mechanics of RAG

Retrieval-Augmented Generation (RAG) uses a sequence of steps to improve AI-generated answers. First, large volumes of data are broken down into smaller, more manageable chunks. Next, the user’s query is converted into a vector by an embedding step so that it can be matched against entries in a vector database.
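The chunking step might look like the following sketch. It assumes words as a rough proxy for tokens (real pipelines usually count tokens with the embedding model’s own tokenizer), and the defaults of 512 units with a 64-unit overlap are illustrative choices; `chunk_text` is a hypothetical helper, not a library function.

```python
def chunk_text(text: str, chunk_size: int = 512, overlap: int = 64) -> list[str]:
    """Split text into chunks of at most `chunk_size` words.

    Consecutive chunks share `overlap` words, so a sentence cut at a chunk
    boundary still appears with some surrounding context in one of them.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


example = " ".join(f"w{i}" for i in range(10))
print(chunk_text(example, chunk_size=4, overlap=1))
```

The overlap trades a little extra storage for a lower chance that the answer to a query is split across two chunks that are never retrieved together.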

After fetching pertinent information from the database, RAG augments the input using techniques such as prompt engineering and structured document excerpts. This augmented input is the basis for responses that are both precise and contextually appropriate.
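One common way to structure excerpts is to number them, tag each with its source, and stop adding them once a context budget is reached. The sketch below assumes a word budget as a stand-in for a token budget and uses illustrative instruction wording; `augment_prompt` and the sample excerpts are hypothetical.

```python
def augment_prompt(query: str, excerpts: list[tuple[str, str]], max_context_words: int = 300) -> str:
    """Build an augmented prompt from ranked (source, text) excerpts, best first.

    Excerpts are added in rank order until the next one would exceed the word
    budget (a rough stand-in for a token budget). Each is numbered so the
    model can cite it.
    """
    blocks, used = [], 0
    for source, text in excerpts:
        n_words = len(text.split())
        if used + n_words > max_context_words:
            break  # stop before overflowing the context budget
        blocks.append(f"[{len(blocks) + 1}] ({source}) {text}")
        used += n_words
    context = "\n".join(blocks)
    return (
        "Answer the question using only the numbered excerpts below and cite "
        "them like [1]. If the answer is not in the excerpts, say you don't know.\n\n"
        f"Excerpts:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


excerpts = [
    ("policy.pdf p.3", "Refunds are issued within 14 days of a return."),
    ("faq.html", "Returns must include the original receipt."),
]
print(augment_prompt("How long do refunds take?", excerpts))
```

Stopping at the first excerpt that does not fit keeps the highest-ranked material and preserves rank order; an alternative is to skip an oversized excerpt and keep trying smaller ones.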

RAG systems can also include mechanisms that automatically refresh external documents and their vectors in the database, keeping the information used during retrieval accurate and up to date.
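A simple way to implement such refreshing, assuming each document has a stable ID, is to store a content hash next to each vector and re-embed only documents whose hash has changed. In the sketch below, `refresh_index` and `fake_embed` are hypothetical names; `fake_embed` stands in for a real embedding model.

```python
import hashlib


def content_hash(text: str) -> str:
    """Fingerprint of a document's content, used to detect changes."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def refresh_index(vector_index: dict, current_docs: dict, embed) -> dict:
    """Sync vector_index (doc_id -> {"hash": ..., "vector": ...}) with current_docs
    (doc_id -> text), calling `embed` only for new or changed documents."""
    stats = {"added": 0, "updated": 0, "removed": 0, "unchanged": 0}
    for doc_id, text in current_docs.items():
        digest = content_hash(text)
        entry = vector_index.get(doc_id)
        if entry is None:
            stats["added"] += 1
        elif entry["hash"] != digest:
            stats["updated"] += 1
        else:
            stats["unchanged"] += 1
            continue  # content unchanged: skip the expensive embedding call
        vector_index[doc_id] = {"hash": digest, "vector": embed(text)}
    for doc_id in list(vector_index):  # copy the keys because we delete while iterating
        if doc_id not in current_docs:
            del vector_index[doc_id]  # the source document no longer exists
            stats["removed"] += 1
    return stats


def fake_embed(text: str) -> list[float]:
    """Hypothetical stand-in for a real embedding model."""
    return [float(len(text))]


vector_index = {}
first = refresh_index(vector_index, {"a": "v1", "b": "hello", "d": "same"}, fake_embed)
second = refresh_index(vector_index, {"a": "v2", "c": "new", "d": "same"}, fake_embed)
print(first)
print(second)
```

Run on a schedule or from a change feed, this keeps the index current without retraining anything and without re-embedding the whole corpus.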

Key Components of a RAG System

Here are the key components of a RAG System:

- Document Processing System
  - Converts documents into vector embeddings
  - Creates topical clusters and relevance markers
  - Maintains indexed references
- Knowledge Base
  - Contains gathered information for AI access
  - Stores vector embeddings
  - Uses document chunks of around 512 tokens
- Retrieval System
  - Processes incoming queries
  - Searches the vector database
  - Uses a hybrid approach combining:
    - BM25 for keyword precision
    - Dense retrieval for semantic understanding
  - Includes cross-encoder reranking
- Integration Mechanism
  - Extracts relevant information
  - Provides context to the base model
  - Uses dynamic prompt construction
  - Maintains a sliding window of 2,048 context tokens
- Generation Component
  - Combines retrieved information with language skills
  - Produces natural language answers
  - Creates responses using source documents
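The hybrid retrieval described in this list can be sketched as follows: Okapi BM25, implemented from scratch with the common defaults k1 = 1.5 and b = 0.75, produces a keyword ranking that is then merged with a dense ranking. The list does not say how the two are combined; this sketch assumes reciprocal rank fusion (RRF) with the conventional constant k = 60. The dense ranking is hard-coded as hypothetical output of an embedding model, and cross-encoder reranking is omitted because it needs a trained model; it would typically rescore the top fused results.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of each document for the query (the keyword side)."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    df = Counter(term for t in tokenized for term in set(t))  # document frequencies
    query_terms = set(tokenize(query))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            length_norm = k1 * (1 - b + b * len(tokens) / avgdl)
            score += idf * tf[term] * (k1 + 1) / (tf[term] + length_norm)
        scores.append(score)
    return scores


def reciprocal_rank_fusion(rankings: list[list[int]], k: int = 60) -> list[int]:
    """Merge rankings (lists of doc indices, best first): score = sum of 1 / (k + rank)."""
    fused = Counter()
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    return [doc_id for doc_id, _ in fused.most_common()]


docs = [
    "Error code E42 means the disk quota is exceeded.",
    "How to free up storage space when your drive is full.",
    "Quarterly sales report for the EMEA region.",
]
query = "error E42 disk full"
bm25 = bm25_scores(query, docs)
keyword_ranking = sorted(range(len(docs)), key=lambda i: bm25[i], reverse=True)
dense_ranking = [1, 0, 2]  # hypothetical output of an embedding-based retriever
fused_ranking = reciprocal_rank_fusion([keyword_ranking, dense_ranking])
print(keyword_ranking, dense_ranking, fused_ranking)
```

RRF works on ranks rather than raw scores, which sidesteps the fact that BM25 scores and cosine similarities live on different, incomparable scales.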

In the retrieval stage, the system first builds a knowledge base so that information can be accessed efficiently. This step converts data into vector embeddings: numerical arrays that represent the content in a form machines can compare.

Then, in the generation phase, the retrieved external information is combined with the user query to augment the input to a large language model (LLM). This two-stage design helps keep AI responses precise and relevant by drawing on up-to-date data sources.

Vector Databases

Vector databases are central to most RAG systems: they store embeddings and provide fast similarity search over them. Text segments are converted into vectors and stored in the database, so the entries most relevant to a user query can be retrieved quickly.

While not strictly mandatory for RAG, embeddings and vector databases markedly improve retrieval. Because each embedding is linked to its original source data, the system can pass precise passages to the generative model and refresh the index when sources change, keeping the knowledge base current and trustworthy.
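To illustrate what a vector database does at its core, here is a toy in-memory store that keeps each vector together with a payload pointing back to its source and answers queries by brute-force cosine similarity. It is a sketch under simplifying assumptions: the class name is hypothetical, the three-dimensional vectors are hand-written stand-ins for model embeddings, and real vector databases add approximate nearest-neighbor indexes, persistence, and metadata filtering.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Toy vector store: (id -> vector, payload) with exhaustive cosine search."""

    def __init__(self) -> None:
        self._items: dict[str, tuple[list[float], dict]] = {}

    def upsert(self, item_id: str, vector: list[float], payload: dict) -> None:
        # Insert or overwrite, so a re-embedded document replaces its stale vector.
        self._items[item_id] = (vector, payload)

    def query(self, vector: list[float], top_k: int = 3) -> list[tuple[str, float, dict]]:
        scored = [
            (item_id, cosine(vector, stored), payload)
            for item_id, (stored, payload) in self._items.items()
        ]
        scored.sort(key=lambda row: row[1], reverse=True)
        return scored[:top_k]


store = InMemoryVectorStore()
store.upsert("doc-1#0", [0.9, 0.1, 0.0], {"source": "pricing.md", "text": "Plans start at $10/month."})
store.upsert("doc-2#0", [0.1, 0.9, 0.2], {"source": "security.md", "text": "Data is encrypted at rest."})
for item_id, score, payload in store.query([0.8, 0.2, 0.1], top_k=1):
    print(item_id, round(score, 3), payload["source"])
```

The payload is what links each embedding back to its original source data, so the retrieved text can be quoted and attributed.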

Semantic Search

Semantic search retrieves documents whose embeddings are most similar to the query’s embedding, in contrast to traditional keyword search, which depends on exact word matching. By capturing the intent behind a query, semantic search can surface relevant information even when the wording differs, which leads to responses that are both accurate and contextually appropriate.
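The difference from keyword search can be shown with a deliberately contrived example. The sketch below assumes hypothetical, hand-assigned three-dimensional word vectors (a real system would obtain them from a trained embedding model) and averages them into a text vector. Neither document shares a single word with the query, yet embedding similarity still separates the relevant document from the irrelevant one.

```python
import math

# Hypothetical, hand-assigned "concept" vectors: [vehicles, money, weather].
# A real system would obtain vectors like these from a trained embedding model.
WORD_VECTORS = {
    "car": [1.0, 0.0, 0.0],
    "automobile": [0.95, 0.05, 0.0],
    "repair": [0.6, 0.4, 0.0],
    "fix": [0.55, 0.45, 0.0],
    "cost": [0.1, 0.9, 0.0],
    "price": [0.05, 0.95, 0.0],
    "rain": [0.0, 0.0, 1.0],
    "forecast": [0.0, 0.1, 0.9],
}


def embed(text: str) -> list[float]:
    """Average the vectors of known words (a crude text embedding)."""
    vectors = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not vectors:
        return [0.0, 0.0, 0.0]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def keyword_overlap(query: str, doc: str) -> int:
    """Number of exactly shared words, which is all plain keyword matching sees."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


query = "car repair cost"
for doc in ["automobile fix price", "rain forecast"]:
    print(f"{doc!r}: keyword overlap={keyword_overlap(query, doc)}, "
          f"semantic similarity={cosine(embed(query), embed(doc)):.2f}")
```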

Research from organizations such as Facebook AI Research (now part of Meta) has enabled semantic search to work across both structured and unstructured data from a range of sources, including enterprise repositories and web pages. These advances underline its vital role in RAG systems, where it helps ensure that responses draw on relevant information.

Applications of RAG in Various Industries

RAG’s adaptability makes it suitable for many sectors. It can draw on different information sources, including private content such as emails, notes, and articles, to deliver thorough responses that reflect current knowledge. External data for RAG can come from APIs, databases, document collections, and other channels, widening the range of accessible information.

RAG uses similarity-scoring algorithms to determine how relevant the retrieved documents are to a user query. This improves user engagement and the effectiveness of conversational interfaces. Meanwhile, researchers are exploring ways to combine RAG with other AI techniques to further advance these interactive experiences.

Customer Service

In customer service, RAG lets users query data repositories interactively, extending the capabilities of generative AI. Customer-facing chatbots built on RAG can give answers that are precise and relevant to the context, which greatly improves user engagement. This leads to faster resolution of customer issues, greater response efficiency, and higher overall satisfaction.

Chatbots equipped with RAG deliver answers tailored to each user query. Beyond accuracy, this makes customer service interactions more engaging and streamlined.

Healthcare

In healthcare, RAG helps build systems that answer health-related questions accurately by drawing on large medical data repositories. This lets healthcare practitioners obtain information quickly, which supports patient treatment and research. By integrating RAG with existing protocols, medical professionals can extract relevant details from comprehensive clinical records and make better-informed decisions about patient care.

RAG can also improve patient engagement by supplying customized health education that matches each patient’s needs and level of understanding. This individualized communication helps ensure that patients receive understandable, accurate health information relevant to them, which supports better health outcomes.

Finance

In the financial sector, Retrieval-Augmented Generation (RAG) strengthens the generative AI models used to answer complex inquiries precisely. Financial institutions use RAG to incorporate external data sources, enabling timely responses to customer questions. Its uses in finance include automating client support, improving compliance checks, and offering tailored investment advice.

In risk assessment, RAG aggregates relevant information from many data points to analyze market dynamics. This faster data extraction gives financial advisors quick access to up-to-date knowledge while they work with clients.

By enabling fast, accurate communication informed by current data, RAG-powered generative AI helps financial institutions serve their customers promptly and effectively.

Benefits of Using RAG in AI

Utilizing RAG in AI offers several advantages:

- It bolsters the reliability of generative AI models by integrating verifiable data from external references.

- By incorporating this information, it significantly reduces erroneous ‘hallucinated’ responses and improves factual accuracy and dependability.

- RAG garners user trust and credibility by providing credible sources to back up its answers.

RAG also plays a critical role in interpreting user queries, which reduces incorrect replies and improves performance on knowledge-intensive tasks. Because it can incorporate proprietary data without retraining the model, the knowledge the model draws on stays current. As a result, RAG is an indispensable tool for improving both the quality and integrity of AI-generated output.

Real-World RAG Implementation: Building Enterprise Search

At our company, we've personally experienced the transformative power of RAG through building Super, our enterprise search platform. When we set out to create the world's most accurate workplace search, we knew traditional keyword-based approaches wouldn't suffice for the complexity of modern business environments.

Our RAG implementation journey taught us several crucial lessons. First, the quality of your vector embeddings determines everything—we spent months fine-tuning our document processing to understand context across different business tools like Slack conversations, Linear tickets, and Google Drive documents. Second, the retrieval mechanism needs to be lightning-fast; our users expect answers in seconds, not minutes.

The results exceeded our expectations. Our RAG-powered search delivered measurable improvements:

- 4 hours saved per person per week through intelligent information retrieval

- Repetitive questions down by 90%+ for Super's first customers

- 140% open rate for automated digest reports that pull insights across all company tools

What made our implementation unique was combining semantic search with source attribution—every answer shows exactly where information came from, with confidence scores. This transparency became crucial for enterprise adoption, as teams needed to trust and verify AI-generated insights.

The most rewarding outcome has been watching teams transform their workflows. Instead of spending time hunting through multiple tools, they ask natural language questions like "What's blocking Project Alpha?" and get comprehensive answers that pull from project management tools, team discussions, and documentation—all with clear source citations.

If you're curious about how enterprise RAG can transform your team's information access, book a demo to see our implementation in action.

Challenges and Considerations

Deploying RAG, while beneficial, presents its own challenges. Integrating and maintaining connections with external data sources demands significant technical investment. Retrieval efficiency depends on factors such as the size of the data source, network latency, and query frequency, so managing these variables carefully is crucial to keeping computational and financial costs down.
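One way to cut repeated retrieval cost and latency for frequent queries is to cache results. The sketch below uses Python’s standard `functools.lru_cache` with light query normalization; `slow_retrieve` is a hypothetical stand-in for a network call to a vector database, and the cache size of 1024 is an arbitrary illustrative value. Results are returned as tuples so callers cannot mutate a cached value.

```python
import functools
import time

CALLS = {"retrieve": 0}  # counts how often the expensive backend is actually hit


def slow_retrieve(query: str) -> tuple[str, ...]:
    """Hypothetical stand-in for a network round trip to a vector database."""
    CALLS["retrieve"] += 1
    time.sleep(0.05)  # simulated network latency
    return (f"top passage for: {query}",)


@functools.lru_cache(maxsize=1024)
def cached_retrieve(normalized_query: str) -> tuple[str, ...]:
    return slow_retrieve(normalized_query)


def retrieve(query: str) -> tuple[str, ...]:
    # Normalize case and whitespace so trivially different phrasings share an entry.
    return cached_retrieve(" ".join(query.lower().split()))


retrieve("What is our refund policy?")
retrieve("what is our   refund policy?")
print(CALLS["retrieve"], cached_retrieve.cache_info())
```

A cache like this must be invalidated (for example with `cached_retrieve.cache_clear()`) whenever the underlying index is refreshed; otherwise it will serve stale results.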

Ensuring accurate attribution in AI-generated content becomes increasingly difficult when amalgamating information from various sources. In cases where third-party data contains sensitive personal details, strict adherence to privacy laws is mandatory.
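On the privacy side, one common safeguard is to redact personal details before documents are embedded and indexed. The regular expressions below are deliberately naive illustrations (the phone pattern, for instance, will also match some dates and other long digit runs) and are not a substitute for a dedicated PII-detection tool; `redact` and `PII_PATTERNS` are hypothetical names.

```python
import re

# Deliberately simple patterns for illustration; real PII detection needs far more.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace matched personal details with placeholders before embedding."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact Jane at jane.doe@example.com or +1 (555) 123-4567."))
```

Redacting at ingestion time means the sensitive values never reach the vector database or the prompt, which is simpler to audit than filtering model output afterwards.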

Looking ahead, advances in RAG may improve the processing of real-time information, further reducing inaccuracies in AI-generated content.

The Future of Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) is poised for an exciting future. Since its introduction in the landmark 2020 paper, RAG has inspired numerous scholarly articles and real-world implementations. Developers can now update the knowledge a model draws on without retraining it, giving AI systems access to up-to-date information that improves both the accuracy and contextual relevance of their outputs.

As RAG technology advances, there is an intense focus on mechanisms for dynamically retrieving external knowledge. These will let AI systems operate with greater specificity in complex settings that require recognizing context instantly. RAG also promises better contextual assistance: AI systems may soon not only surface pertinent information but also tailor insights to user objectives.

Wrapping up

RAG marks a big milestone in AI. By merging live data retrieval with generative AI models, it improves the precision, relevance, and reliability of AI output. Across sectors such as customer support, healthcare, and finance, RAG is transforming how industries operate by enabling more contextually appropriate interactions.

As advancements in RAG proceed, it stands poised to offer even more significant enhancements—positioning AI as an essential utility in everyday scenarios.