How to speed up the feeling of real-time collaboration (without doing much at all)

Hey, I'm Pierre, CTO at Slite, and I'm here to discuss a topic close to our cold developers' hearts: speed. Specifically, speed of Docs, Smart tables, Discussions, and real-time collaboration and editing. Let me kick things off by contradicting myself.

Speed does not always matter

Speed is not relevant for many technical aspects of a product. Sometimes, slowness provides more value. Take delayed notifications: you might want the system to wait 30 seconds before sending one, to be sure the author hasn't removed their message, or to check whether the notification is part of a batch you can send as a group - saving the user time and focus.
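To make the idea concrete, here is a minimal sketch of a delayed notification queue. It assumes an in-memory queue that some caller polls periodically; the names (`createDelayedNotifier`, `enqueue`, `cancel`, `flushDue`) are hypothetical and not Slite's API. Only the 30-second wait comes from the paragraph above.

```typescript
// Hypothetical sketch: hold notifications for a delay, drop the ones whose
// message was removed in the meantime, and deliver the rest grouped per recipient.
type PendingNotification = { messageId: string; recipient: string; text: string };
type Deliver = (recipient: string, batch: PendingNotification[]) => void;

function createDelayedNotifier(deliver: Deliver, delayMs: number = 30000) {
  let queue: Array<{ dueAt: number; note: PendingNotification }> = [];

  return {
    // Queue a notification; it becomes deliverable delayMs after `now`.
    enqueue(note: PendingNotification, now: number): void {
      queue.push({ dueAt: now + delayMs, note });
    },
    // The author removed the message before the delay elapsed: never notify.
    cancel(messageId: string): void {
      queue = queue.filter((entry) => entry.note.messageId !== messageId);
    },
    // Deliver everything that is due, with one grouped call per recipient.
    flushDue(now: number): void {
      const due = queue.filter((entry) => entry.dueAt <= now);
      queue = queue.filter((entry) => entry.dueAt > now);
      const groups = new Map<string, PendingNotification[]>();
      for (const entry of due) {
        const group = groups.get(entry.note.recipient) ?? [];
        group.push(entry.note);
        groups.set(entry.note.recipient, group);
      }
      groups.forEach((batch, recipient) => deliver(recipient, batch));
    },
  };
}
```

Passing `now` in explicitly, rather than reading the clock inside the queue, keeps the sketch easy to test; a real system would more likely rely on a job scheduler.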

However, when it comes to browsing docs, searching or organising them, speed does matter. And we care a lot about speed at Slite.

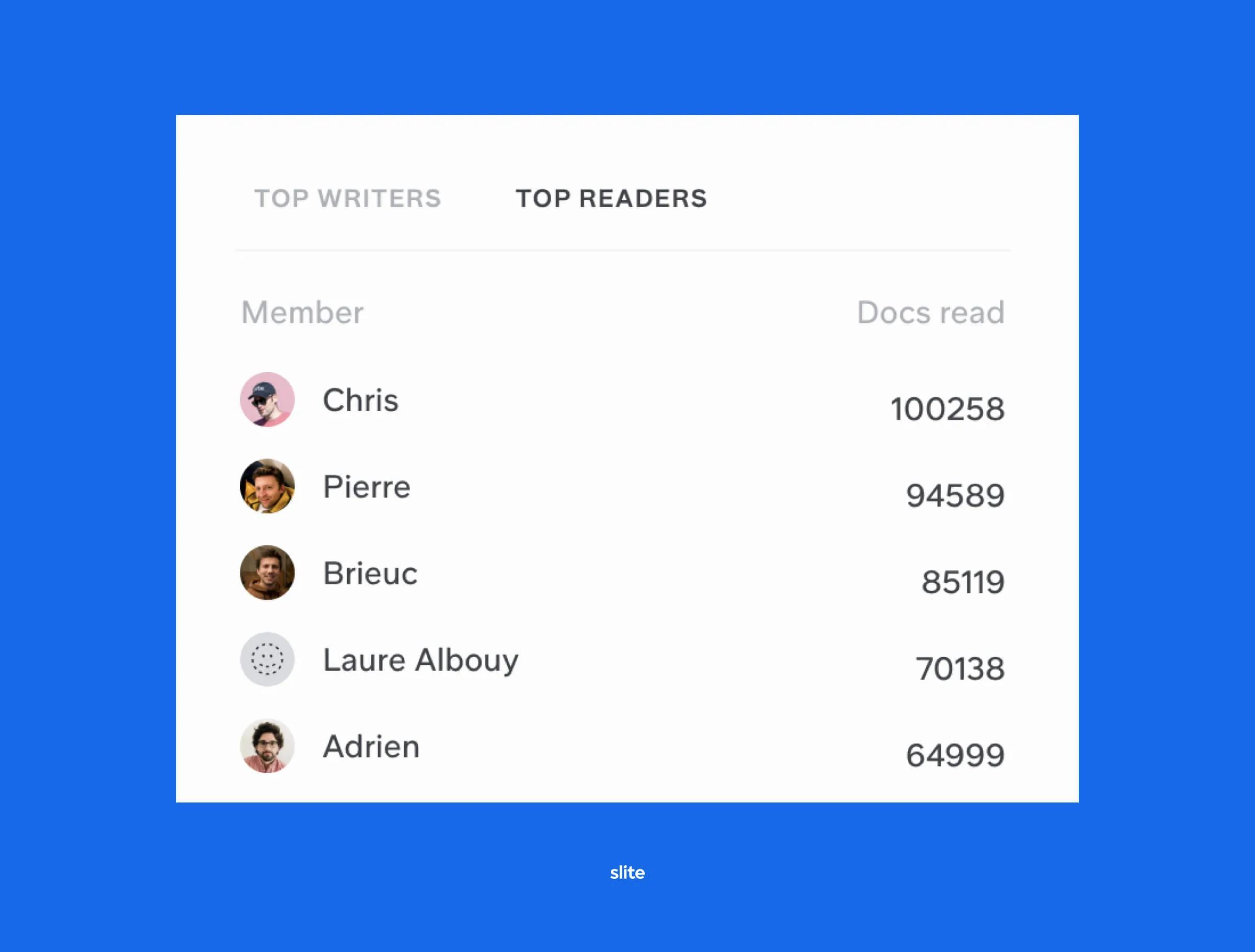

To prove it, a screenshot of our team's Slite statistics: I'm the #2 reader, not because I read more, but because I constantly switch between docs to check if we're getting faster.

Besides my own personal obsession, we build speed into our workflow for a few reasons:

- Collaboration is impossible without speed: docs need to update in real-time so contributors can edit together seamlessly.

- Speed is table stakes: gone are the days when people expected long load times for sites - lags diminish user experience.

- No one uses one doc at a time: Personally, I have at least 5 internal docs open at any one time. If the app is slow, I could be toggling between docs that show outdated information.

- Decisions can't wait: Think 'async' and 'fast' are antonyms? Think again. When async discussions update in realtime, decisions arrive faster, too.

For all these reasons, we consider speed a key product feature. Here's how we do it.

Optimising your product to favor speed

You can always push technical implementations to be faster. But often, the best speed optimisations are found by understanding user behaviours, and following the golden paths they use. Check out this product requirements document guide to stay on track.

Some examples of how we make docs and discussions populate at lightning speed:

'Optimistic' loading

When a user hovers over a Slite Discussion, we preload the information contained within that particular thread. If the user decides to click on it (and she does, 90% of the time), the discussion loads instantly, because the loading already happened between the mouse-hover and mouse-click events.
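A rough sketch of the hover-then-click pattern, assuming a promise-based fetch function that you pass in. `createPrefetcher`, `fetchThread` and the `Thread` shape are hypothetical names for illustration, not Slite's code.

```typescript
// Hypothetical sketch: start loading on hover, reuse the same request on click.
type Thread = { id: string; messages: string[] };
type FetchThread = (id: string) => Promise<Thread>;

function createPrefetcher(fetchThread: FetchThread) {
  // One in-flight or settled promise per thread id, shared by hover and click.
  const requests = new Map<string, Promise<Thread>>();

  function load(id: string): Promise<Thread> {
    let pending = requests.get(id);
    if (!pending) {
      pending = fetchThread(id);
      // Forget failed requests so that a later hover or click can retry.
      pending.catch(() => requests.delete(id));
      requests.set(id, pending);
    }
    return pending;
  }

  return {
    // Fire and forget: the result is picked up later by onClick.
    onHover: (id: string): void => {
      void load(id);
    },
    // Resolves immediately if the hover request has already finished.
    onClick: (id: string): Promise<Thread> => load(id),
  };
}
```

In a browser you would typically call `onHover` from a `mouseenter` listener and `onClick` from the `click` listener on the same element.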

Reuse previously fetched data

When users browse their Slite, they navigate between a dozen docs. We monitor doc loading to make sure it never exceeds 200ms. And if you come back to a doc, we display the previously fetched information right away, while running a query in the background to check whether anything changed between the two visits.
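This is often called "stale-while-revalidate". A minimal sketch, assuming each doc carries an `updatedAt` value that changes whenever the doc does; `createDocCache`, `fetchDoc` and the `Doc` shape are hypothetical names, not Slite's internals.

```typescript
// Hypothetical sketch: show the cached doc at once, then refresh it in the background.
type Doc = { id: string; updatedAt: number; body: string };
type FetchDoc = (id: string) => Promise<Doc>;

function createDocCache(fetchDoc: FetchDoc) {
  const cache = new Map<string, Doc>();

  // Calls `render` immediately with the cached copy (if any), and a second
  // time only when the server copy turns out to be different.
  async function open(id: string, render: (doc: Doc) => void): Promise<void> {
    const cached = cache.get(id);
    if (cached) render(cached);

    const fresh = await fetchDoc(id);
    cache.set(id, fresh);
    if (!cached || fresh.updatedAt !== cached.updatedAt) render(fresh);
  }

  return { open };
}
```

Comparing a version marker instead of the whole body keeps the "did anything change?" check cheap; any field that changes on every edit would do.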

Pick best-in-class services

Building a performant search engine is a computer science topic in itself. That's why we've always relied on Algolia to do it for us. We index the content there, and run a search query on every character users type in the input. Our average search response time is about 20 milliseconds.

Throttle real-time data

We could send each and every character a user types to the other participants on a doc. But that would increase our server load and hurt the performance of the editor: people using Slite would receive updates so often that their typing would be blocked. In the third month of Slite's existence, we took the time to throttle outgoing updates to one every 600ms. We also batch them, so it still feels like real-time, even though the changes are sent as chunks of data.
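A minimal sketch of throttling plus batching, under the assumption that the first change in a quiet period arms a timer and everything typed until it fires goes out as one chunk. `createBatcher`, `Change` and the injectable `schedule` parameter are hypothetical names; only the 600ms interval comes from the text.

```typescript
// Hypothetical sketch: collect changes and send them at most once per interval.
type Change = { position: number; text: string };
type Schedule = (run: () => void, delayMs: number) => void;

function createBatcher(
  send: (batch: Change[]) => void,
  intervalMs: number = 600,
  // Injectable so the timing can be faked in tests; defaults to setTimeout.
  schedule: Schedule = (run, delayMs) => {
    setTimeout(run, delayMs);
  },
) {
  let pending: Change[] = [];
  let timerArmed = false;

  // Send everything collected so far as a single chunk.
  function flush(): void {
    timerArmed = false;
    if (pending.length === 0) return;
    const batch = pending;
    pending = [];
    send(batch);
  }

  // Record a change; arm the timer only if none is already running.
  function push(change: Change): void {
    pending.push(change);
    if (!timerArmed) {
      timerArmed = true;
      schedule(flush, intervalMs);
    }
  }

  return { push, flush };
}
```

Calling `flush()` directly is useful when the user leaves the doc, so the last few characters are not held back until the timer fires.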

The odds are, people who use Slite barely notice these individual optimisations - but put together, they create a much smoother and more pleasant UX.

Recent tech changes we've made for speed

We originally built our entire app on Redux with global stores. This solution worked for many years, but a global state triggers app-wide re-renders on any change. To make our developers' lives a bit easier, and also to split concerns, we recently made some speed-oriented decisions:

- We created a @slite/data package that offers an internally optimised way to fetch and use data without thinking about speed. An internal group of developers makes sure it's performant and provides enough options.

- We use zustand to manage global UI states and local states easily.

As an example of the second, here's how we handle focus mode in 30 lines of code:
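The general pattern can be sketched without any library. To be clear, the following is not Slite's code and does not use zustand's API: it is a hand-rolled stand-in with hypothetical names (`createStore`, `uiStore`, `toggleFocusMode`, `sidebarOpen`), meant only to show how subscribing to a single slice of UI state avoids waking up everything else when another slice changes.

```typescript
// Hypothetical stand-in for a small UI store with slice-level subscriptions.
function createStore<S extends object>(initial: S) {
  let state: S = initial;
  const watchers: Array<() => void> = [];

  return {
    getState: (): S => state,
    // Merge a partial update, then let each watcher decide whether it cares.
    setState(partial: Partial<S>): void {
      state = { ...state, ...partial };
      watchers.slice().forEach((notify) => notify());
    },
    // `onChange` runs only when the selected slice actually changes.
    subscribe<T>(select: (s: S) => T, onChange: (slice: T) => void): () => void {
      let last = select(state);
      const notify = (): void => {
        const next = select(state);
        if (next !== last) {
          last = next;
          onChange(next);
        }
      };
      watchers.push(notify);
      // Returns an unsubscribe function.
      return () => {
        const index = watchers.indexOf(notify);
        if (index >= 0) watchers.splice(index, 1);
      };
    },
  };
}

type UiState = { focusMode: boolean; sidebarOpen: boolean };
const uiStore = createStore<UiState>({ focusMode: false, sidebarOpen: true });

function toggleFocusMode(): void {
  uiStore.setState({ focusMode: !uiStore.getState().focusMode });
}
```

A component that only cares about focus mode would subscribe with `(s) => s.focusMode` and stay untouched when `sidebarOpen` changes, which is the opposite of the app-wide re-render problem described above.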

Some speedy stats

To wrap up, I want to summarize the progress we've made. Speed is one of the most measurable performance metrics, and we've got lots of data to prove we're on the right track.

- Collections loading: Our smart database tool is responsive at 100 cells, loading time < 200 ms at p95

- Switching between docs occurs at 120ms p95 (was 250ms before)

- Search response time: 23ms on average
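For readers less familiar with the notation, "p95" means that 95% of measured loads were at least that fast. A minimal sketch of computing it with the nearest-rank method, assuming you already collect timings in milliseconds; the `percentile` helper is hypothetical and not part of our tooling.

```typescript
// Hypothetical helper: nearest-rank percentile over a list of timings (in ms).
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("percentile needs at least one sample");
  if (p <= 0 || p > 100) throw new Error("p must be in the range (0, 100]");
  // Sort a copy so the caller's array is left untouched.
  const sorted = samples.slice().sort((a, b) => a - b);
  // Nearest rank: the smallest value with at least p% of samples at or below it.
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[rank - 1];
}
```

Unlike an average, a p95 is not flattered by the many fast loads: a handful of very slow ones is enough to push it up, which is why it is a common target for load times.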

Hopefully this convinces you to rethink your app speed, and make it a part of your next roadmap. Thanks for reading 👋 and hope to see you in a Slite doc soon.

Written by Pierre Renaudin

Pierre Renaudin is Slite's co-founder and CTO. A former Ruby on Rails fan, Pierre worked for Azendoo before founding Slite. He lives with his companion in Nantes, France and is a Zwift, music and cooking enthusiast. Find him @pierrerenaudin on Twitter.